1. Deployment Environment

- Operating system: Ubuntu 22.04.4 LTS

- CPU: Intel(R) Xeon(R) CPU E5-2650, 32 GB RAM

- Graphics card: NVIDIA RTX 4060 Ti 16 GB (NVIDIA-SMI 535.104.05, Driver Version: 535.104.05, CUDA Version: 12.2)

- Python 3.11.5
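
To compare another machine against the environment listed above, the version line printed by nvidia-smi can be parsed with a few lines of Python. This is a minimal sketch and not part of the EmoLLM repository: the helper name `parse_nvidia_smi_header` is hypothetical, and it assumes the header uses the same `NVIDIA-SMI ... Driver Version: ... CUDA Version: ...` layout as the line quoted above.

```python
import re
import sys

# Hypothetical helper (not part of EmoLLM): extracts the three version
# numbers from the header line that nvidia-smi prints.
_HEADER = re.compile(
    r"NVIDIA-SMI\s+(?P<smi>[\d.]+)\s+"
    r"Driver Version:\s*(?P<driver>[\d.]+)\s+"
    r"CUDA Version:\s*(?P<cuda>[\d.]+)"
)


def parse_nvidia_smi_header(text: str) -> dict:
    """Return the NVIDIA-SMI, driver, and CUDA versions found in `text`."""
    match = _HEADER.search(text)
    if match is None:
        raise ValueError("no NVIDIA-SMI header found in the given text")
    return match.groupdict()


if __name__ == "__main__":
    # The header line from the environment listed in this guide.
    header = "NVIDIA-SMI 535.104.05 Driver Version: 535.104.05 CUDA Version: 12.2"
    print(parse_nvidia_smi_header(header))
    print("Python", ".".join(str(part) for part in sys.version_info[:3]))
```

On a machine with an NVIDIA driver installed, the same function can be fed the text that `nvidia-smi` prints instead of the hard-coded header.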

2. Default Deployment Steps

- 1. Clone the code with git, or download it manually and place it on the server:

git clone https://github.com/SmartFlowAI/EmoLLM.git

- 2. Install the Python dependencies:

cd EmoLLM

pip install -r requirements.txt
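
After the install finishes, it can be useful to confirm that every listed package is actually present. The sketch below is an illustration rather than part of EmoLLM: the function names are hypothetical, and the parser only handles plain `name` or `name==version` style lines, skipping pip options and URL requirements. It relies on the standard-library `importlib.metadata` module.

```python
import re
from importlib import metadata

# A requirement line is assumed to start with the distribution name.
_NAME = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*")


def requirement_names(lines):
    """Extract distribution names from requirements.txt-style lines."""
    names = []
    for raw in lines:
        if "://" in raw:  # URL requirements are out of scope for this sketch
            continue
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options
            continue
        match = _NAME.match(line)
        if match:
            names.append(match.group(0))
    return names


def missing_packages(names):
    """Return the names for which no installed distribution is found."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Assumes the script is run from the EmoLLM directory.
    with open("requirements.txt", encoding="utf-8") as handle:
        print(missing_packages(requirement_names(handle)))
```

An empty list means every parsed name resolved to an installed distribution; it does not check that the installed versions satisfy the pinned ones.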

- 3. Download the model files, either automatically with the download_model.py script or manually.

- 3.1. To download the model files automatically, run the script:

python download_model.py <model_repo>

To run web_demo-aiwei.py, download its model repository, ajupyter/EmoLLM_aiwei:

python download_model.py ajupyter/EmoLLM_aiwei

To run web_internlm2.py, download its model repository, jujimeizuo/EmoLLM_Model:

python download_model.py jujimeizuo/EmoLLM_Model

The script can also download other models, but it only supports models hosted on the openxlab platform; models from other platforms must be downloaded manually. After a successful download, a new model directory appears under the EmoLLM directory. This is the model file directory.

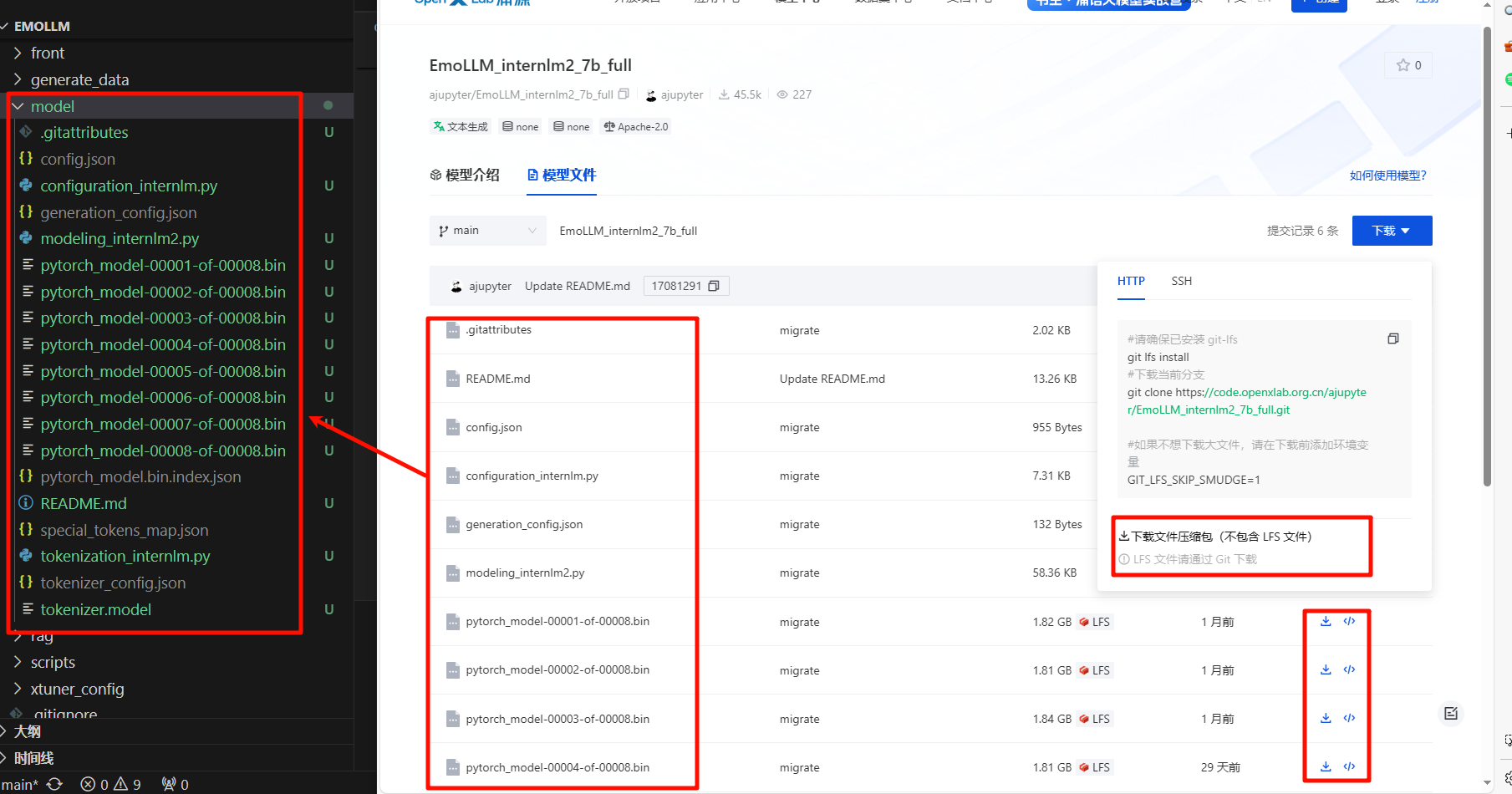

- 3.2. To download the model files manually, go to openxlab, Huggingface, or another platform, download the complete model directory, and put all of the files in the EmoLLM/model directory. Note that LFS files (e.g. pytorch_model-00001-of-00008.bin) are not included when the model directory is downloaded as a package, so you need to download each LFS file individually.
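
Because the LFS weight files have to be fetched one by one, it is easy to miss a shard. The following sketch lists the shards that are absent from a set of filenames, based only on the `-00001-of-00008` naming pattern mentioned above. The function name `missing_shards` is hypothetical and the check is not part of EmoLLM; it cannot detect a shard set that is missing entirely, nor a file that is present but incomplete.

```python
import os
import re
from collections import defaultdict

# Matches sharded weight names such as pytorch_model-00001-of-00008.bin.
_SHARD = re.compile(
    r"^(?P<stem>.+)-(?P<index>\d+)-of-(?P<total>\d+)(?P<ext>\.[^.]+)$"
)


def missing_shards(filenames):
    """Return shard filenames implied by the present shards but absent."""
    present = defaultdict(set)
    for name in filenames:
        match = _SHARD.match(name)
        if match:
            key = (match["stem"], match["total"], match["ext"])
            present[key].add(int(match["index"]))
    missing = []
    for (stem, total, ext), indices in sorted(present.items()):
        width = len(total)  # keep the zero padding used by the existing names
        for index in range(1, int(total) + 1):
            if index not in indices:
                missing.append(f"{stem}-{index:0{width}d}-of-{total}{ext}")
    return missing


if __name__ == "__main__":
    # Assumes the script is run from the EmoLLM directory after the download.
    print(missing_shards(os.listdir("model")))
```

An empty result means that every shard set found in the directory is complete by name.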

- 4. Run the script. app.py only calls web_demo-aiwei.py or web_internlm2.py, so download the model files for whichever script you want to run, comment out the other script in app.py, and then run:

python app.py
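
The pairing between each demo script and its model repository, as described above, can be kept in one small table so that the matching download command is always used. This is a sketch for illustration only: the names `MODEL_REPO_FOR_SCRIPT` and `download_command` are hypothetical and do not come from the EmoLLM code; the script names and repositories are the ones given in this guide.

```python
# Pairs taken from this guide: each demo script and the model repository it uses.
MODEL_REPO_FOR_SCRIPT = {
    "web_demo-aiwei.py": "ajupyter/EmoLLM_aiwei",
    "web_internlm2.py": "jujimeizuo/EmoLLM_Model",
}


def download_command(script: str) -> list:
    """Build the download_model.py command line for the given demo script."""
    try:
        repo = MODEL_REPO_FOR_SCRIPT[script]
    except KeyError:
        raise ValueError(f"no model repository is listed for {script!r}") from None
    return ["python", "download_model.py", repo]


if __name__ == "__main__":
    for script in MODEL_REPO_FOR_SCRIPT:
        print(script, "->", " ".join(download_command(script)))
```

Returning the command as a list keeps it ready for `subprocess.run` without any shell quoting.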

- 5. After app.py starts, open the model's web page in a browser at http://0.0.0.0:7860. To use a different port, edit app.py. If you are deploying on a remote server, you need to configure local port mapping.
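
This guide does not say which port-mapping method to use. One common option is SSH local port forwarding, sketched below under the assumption that you have SSH access to the server. The target `user@example.com` is a placeholder and both function names are hypothetical. `ssh -L` forwards a local port to the server, and `-N` keeps the connection open without running a remote command.

```python
import socket


def tunnel_command(ssh_target: str, port: int = 7860) -> list:
    """Build an ssh command that forwards local `port` to the same port on the server."""
    return ["ssh", "-N", "-L", f"{port}:127.0.0.1:{port}", ssh_target]


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Placeholder target; replace it with your own user and server address.
    print(" ".join(tunnel_command("user@example.com")))
    print("local port 7860 reachable:", port_is_open("127.0.0.1", 7860))
```

With the tunnel running, the page served on the remote machine should be reachable locally at http://127.0.0.1:7860.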

- 6. Using other models: EmoLLM offers several versions of its open-source models, uploaded to openxlab, Huggingface, and other platforms. The roles include the father's boyfriend counselor, the old mother's counselor, and the gentle royal psychiatrist, and the available models include EmoLLM_internlm2_7b_full, EmoLLM-InternLM7B-base-10e, and others. Repeat steps 3 and 4 to download a model, manually or automatically, into the EmoLLM/model directory and run it.
